07 Mar 2016

Intro

The majority of the code I’ve written over the years has involved C#, Swift, Objective-C or JavaScript. Along the way I’ve also read quite a lot about F#, and dabbled in it. Heck, I even went to a Clojure session at Devoxx Belgium 2015.

Back in January I attended NDC London. Long story short, I ended up in Rob Conery’s session “Three and a half ways Elixir changed me (and other hyperbole)”. The session was interesting, but it wasn’t until this week that I started looking more closely at the language.

Writing my first Elixir code

The beginner part of the String Calculator kata seemed like a suitable starting point.

Creating the project

Mix, Elixir’s build tool, is used among other things to create, compile and test projects, so I ran the following command:

$ mix new string_calculator

This created the directory string_calculator as well as the following files inside it:

* creating README.md
* creating .gitignore
* creating mix.exs
* creating config
* creating config/config.exs
* creating lib
* creating lib/string_calculator.ex
* creating test
* creating test/test_helper.exs
* creating test/string_calculator_test.exs

Writing some code

I opened test/string_calculator_test.exs and wrote my first test:

test "empty string returns 0" do
  assert StringCalculator.add("") == 0
end

The simplest implementation I could think of was:

def add(numbers) do
  0
end

Tests are run by executing mix test from the command line.

On to the second test:

test "handles one number" do
  assert StringCalculator.add("1") == 1
end

Elixir functions are identified by name and arity (number of arguments). When a function is called, Elixir pattern matches the passed arguments against its clauses, from top to bottom, to find a suitable one to invoke.

I also remembered reading about named functions supporting both do: and do/end block syntax here.

Based on this, my implementation ended up like this:

def add(""), do: 0

def add(numbers) do
  String.to_integer(numbers)
end

This was confusing and awesome at the same time, considering the programming languages I’m used to.

- The first one will be matched for empty strings.

- The second will be matched for non-empty strings.

Let that sink in and let’s continue with the third test:

test "handles two numbers" do
  assert StringCalculator.add("1,2") == 3
end

I’ve been using LINQ as well as lambda expressions extensively in C# over the years, so anonymous functions felt natural to me. Since I’ve also dabbled in F#, Elixir’s pipe operator (|>) was familiar too.

This was my first attempt:

def add(numbers) do
  numbers
  |> String.split(",")
  |> Enum.map(fn(x) -> String.to_integer(x) end)
  |> Enum.reduce(fn(x, acc) -> x + acc end)
end

Not too bad, but I had a feeling that the map step could be removed. Sure enough, there’s Enum.reduce/3, which makes it possible to set the initial value of the accumulator:

def add(numbers) do
  numbers
  |> String.split(",")
  |> Enum.reduce(0, fn(x, acc) -> String.to_integer(x) + acc end)
end
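For comparison, here’s a rough Python sketch of the same idea (Python isn’t part of this project, it’s just a familiar point of reference). functools.reduce takes the initial accumulator as its third argument, much like Enum.reduce/3, but note the reducer’s argument order: Python passes the accumulator first, while Elixir passes the element first and the accumulator second.

```python
from functools import reduce


def add(numbers: str) -> int:
    """Sum comma-separated integers, like the Enum.reduce/3 version above."""
    if numbers == "":
        # Mirrors the add("") clause: "".split(",") gives [""], which int() rejects.
        return 0
    # 0 is the initial accumulator, so the conversion can happen inside the
    # reducer and no separate map step is needed. Python's reducer gets (acc, x).
    return reduce(lambda acc, x: int(x) + acc, numbers.split(","), 0)


print(add("1,2,3,4,5"))  # 15
```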

For reference, this is the complete implementation so far:

defmodule StringCalculator do
  def add(""), do: 0

  def add(numbers) do
    numbers
    |> String.split(",")
    |> Enum.reduce(0, fn(x, acc) -> String.to_integer(x) + acc end)
  end
end

On to the next requirement:

test "handles more than two numbers" do
  assert StringCalculator.add("1,2,3,4,5") == 15
end

The implementation already handled this requirement, so I continued with the next one:

test "handles newlines between numbers" do
  assert StringCalculator.add("1\n2,3") == 6
end

I looked at the documentation for String.split/2 and found this:

The pattern can be a string, a list of strings or a regular expression.

The implementation required a small change to make the new test pass:

def add(numbers) do
  numbers
  |> String.split([",", "\n"])
  |> Enum.reduce(0, fn(x, acc) -> String.to_integer(x) + acc end)
end
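Python’s str.split only accepts a single separator, so a rough equivalent of passing a list of patterns to String.split is a regular expression that matches any of them. A minimal sketch; split_on_any is a hypothetical helper name:

```python
import re
from typing import List


def split_on_any(text: str, delimiters: List[str]) -> List[str]:
    """Split text on any of the literal delimiters, similar to String.split/2 with a list."""
    # re.escape stops delimiters such as "." or "|" from being read as regex syntax.
    pattern = "|".join(re.escape(d) for d in delimiters)
    return re.split(pattern, text)


def add(numbers: str) -> int:
    if numbers == "":
        return 0
    return sum(int(x) for x in split_on_any(numbers, [",", "\n"]))


print(split_on_any("1\n2,3", [",", "\n"]))  # ['1', '2', '3']
```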

Moving on to the next requirement:

test "handles custom delimiters" do
  assert StringCalculator.add("//;\n1;2") == 3
end

This is what I came up with, excluding the function that handles empty strings:

def add("//" <> rest) do
  [delimiter, numbers] = String.split(rest, "\n")
  add(numbers, [delimiter])
end

def add(numbers) do
  add(numbers, [",", "\n"])
end

defp add(numbers, delimiters) do
  numbers
  |> String.split(delimiters)
  |> Enum.reduce(0, fn(x, acc) -> String.to_integer(x) + acc end)
end

I knew string concatenation was done with <>, but this Stack Overflow answer made me realize I could also use it to match on a string prefix, as in the first function above.

I also made the last function private by using defp instead of def. There should be no add/2 function available to the consumer.
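The same “strip the // prefix, then read the delimiter line” idea, sketched in Python with a hypothetical parse_input helper. One difference worth knowing: the Elixir version splits rest on every newline, so [delimiter, numbers] only matches when exactly one newline follows the delimiter, whereas split("\n", 1) below stops after the first one.

```python
from typing import List, Tuple

DEFAULT_DELIMITERS = [",", "\n"]


def parse_input(text: str) -> Tuple[str, List[str]]:
    """Return (numbers, delimiters) for input that may start with a "//<delimiter>\\n" header."""
    if text.startswith("//"):
        # Equivalent of matching "//" <> rest: drop the prefix...
        rest = text[2:]
        # ...then everything up to the first newline is the custom delimiter.
        # Like Elixir's MatchError, unpacking fails (ValueError) if there's no newline.
        delimiter, numbers = rest.split("\n", 1)
        return numbers, [delimiter]
    return text, list(DEFAULT_DELIMITERS)


numbers, delimiters = parse_input("//;\n1;2")
print(numbers, delimiters)  # 1;2 [';']
```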

Another thing to note is that the order of the function clauses matters, since Elixir tries them from top to bottom when looking for a match. So if we, for instance, swap the order of the first two functions above, the code will break. This is because add(numbers) will match every non-empty string passed as an argument, including those starting with “//”.
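To make the ordering rule concrete, here’s a small Python sketch that models function clauses as an ordered list of (predicate, body) pairs where the first match wins. It’s only an analogy for how Elixir picks a clause, not how the BEAM actually dispatches, and the clause names and bodies are made up for illustration.

```python
from typing import Callable, List, Tuple

# A "clause" is (does it match?, what to return). This only models first-match-wins
# ordering; the bodies are placeholders, not the calculator logic.
Clause = Tuple[Callable[[str], bool], Callable[[str], str]]


def dispatch(clauses: List[Clause], arg: str) -> str:
    for matches, body in clauses:
        if matches(arg):
            return body(arg)
    # Elixir would raise FunctionClauseError when no clause matches.
    raise ValueError("no clause matches " + repr(arg))


empty_clause: Clause = (lambda s: s == "", lambda s: "empty string")
custom_clause: Clause = (lambda s: s.startswith("//"), lambda s: "custom delimiter")
catch_all_clause: Clause = (lambda s: True, lambda s: "default delimiters")

correct_order = [empty_clause, custom_clause, catch_all_clause]
swapped_order = [empty_clause, catch_all_clause, custom_clause]

print(dispatch(correct_order, "//;\n1;2"))  # custom delimiter
print(dispatch(swapped_order, "//;\n1;2"))  # default delimiters: the catch-all wins
```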

On to the final test. First I had to figure out how to test for errors. In the docs I found assert_raise/3:

test "raises error when passed negative numbers" do
  assert_raise ArgumentError, "-1, -3", fn ->
    StringCalculator.add("-1,2,-3")
  end
end

My initial attempt:

defp add(numbers, delimiters) do
  numbers
  |> String.split(delimiters)
  |> check_for_negatives()
  |> Enum.reduce(0, fn(x, acc) -> String.to_integer(x) + acc end)
end

defp check_for_negatives(numbers) do
  negatives = Enum.filter(numbers, fn(x) -> String.to_integer(x) < 0 end)
  # Parentheses around raise avoid ambiguity inside the do: keyword.
  if length(negatives) > 0, do: raise(ArgumentError, message: Enum.join(negatives, ", "))
  numbers
end

It converted the strings to integers twice, which I wasn’t happy with, so I ended up with this:

defp add(numbers, delimiters) do
  numbers
  |> String.split(delimiters)
  |> Enum.map(fn(x) -> String.to_integer(x) end)
  |> check_for_negatives()
  |> Enum.reduce(0, fn(x, acc) -> x + acc end)
end

defp check_for_negatives(numbers) do
  negatives = Enum.filter(numbers, fn(x) -> x < 0 end)
  if length(negatives) > 0, do: raise(ArgumentError, message: Enum.join(negatives, ", "))
  numbers
end
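The convert-once version translates fairly directly to Python. In this sketch ValueError stands in for ArgumentError, and add_parts is a hypothetical name for the pipeline after splitting:

```python
from typing import List


def check_for_negatives(numbers: List[int]) -> List[int]:
    """Raise if any number is negative, otherwise pass the list through unchanged."""
    negatives = [n for n in numbers if n < 0]
    if negatives:
        # Same message format as Enum.join(negatives, ", ") in the Elixir version.
        raise ValueError(", ".join(str(n) for n in negatives))
    return numbers


def add_parts(parts: List[str]) -> int:
    # Convert once, then check, then sum: map |> check_for_negatives |> reduce.
    return sum(check_for_negatives([int(p) for p in parts]))


try:
    add_parts("-1,2,-3".split(","))
except ValueError as error:
    print(error)  # -1, -3
```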

I also remembered reading about partial application along the way, which I’d seen in F#.

Elixir supports partial application of functions which can be used to define anonymous functions in a concise way.

I decided to give it a go and my string calculator ended up like this:

defmodule StringCalculator do
  def add(""), do: 0

  def add("//" <> rest) do
    [delimiter, numbers] = String.split(rest, "\n")
    add(numbers, [delimiter])
  end

  def add(numbers) do
    add(numbers, [",", "\n"])
  end

  defp add(numbers, delimiters) do
    numbers
    |> String.split(delimiters)
    |> Enum.map(&String.to_integer(&1))
    |> check_for_negatives()
    |> Enum.reduce(0, &(&1 + &2))
  end

  defp check_for_negatives(numbers) do
    negatives = Enum.filter(numbers, &(&1 < 0))
    if length(negatives) > 0, do: raise(ArgumentError, message: Enum.join(negatives, ", "))
    numbers
  end
end
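Python has no capture operator, but the idea behind &String.to_integer(&1) and &(&1 + &2) maps to passing an existing function instead of spelling out a lambda. (Elixir also accepts the even shorter &String.to_integer/1 to capture a named function.) A small side-by-side comparison:

```python
import operator
from functools import reduce

parts = ["1", "2", "3"]

# Spelled out, like fn(x) -> String.to_integer(x) end and fn(x, acc) -> x + acc end.
with_lambdas = reduce(lambda acc, x: acc + x, map(lambda s: int(s), parts), 0)

# Passing existing functions, closer in spirit to &String.to_integer(&1) and &(&1 + &2).
with_function_refs = reduce(operator.add, map(int, parts), 0)

print(with_lambdas, with_function_refs)  # 6 6
```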

Looking forward to learning more about Elixir!

Source code

The source code can be found here.

27 Dec 2015

A while ago I got tired of manually configuring my C# projects to allow for easy semantic versioning and decided to automate the steps involved.

I created a small Node.js command line tool which does the following:

1. Creates a text file (default: version.txt) in the project folder which contains the version number (default: 0.1.0).
2. Copies a BuildCommon.targets file to {project-root}/build/BuildCommon.targets, which is used to extend the build/clean process.
3. Adds a reference to the BuildCommon.targets file in the project file.
4. Comments out existing version related attributes in AssemblyInfo.cs.

I can then change the version number in version.txt to 1.0.0-rc1, 1.0.0 etc., build the project, and the assembly will have the correct version set.

Extending the build process

The build process is extended by overriding the CompileDependsOn property, prepending the custom UpdateVersion task to its original value so that it runs before compilation.

The UpdateVersion task creates AssemblyVersion.cs in the intermediate output path ($(IntermediateOutputPath)) and adds the version related attributes to it. The values are read from the text file created in step 1 above.
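To illustrate what the UpdateVersion step produces, here’s a rough Python sketch of generating AssemblyVersion.cs from the contents of version.txt. The real task is MSBuild, and the helper names and exact attribute set are assumptions on my part: since AssemblyVersion and AssemblyFileVersion expect numeric version parts, the sketch strips a pre-release suffix like -rc1 from those and keeps the full string in AssemblyInformationalVersion.

```python
import re
from pathlib import Path


def assembly_version_source(version: str) -> str:
    """Build AssemblyVersion.cs contents from a semantic version such as "1.0.0-rc1".

    Hypothetical helper: the attribute set is an assumption, not the tool's exact output.
    """
    # Keep only the numeric part ("1.0.0") for attributes that expect numbers;
    # drop any pre-release ("-rc1") or build-metadata ("+abc") suffix.
    numeric = re.split(r"[-+]", version, maxsplit=1)[0]
    lines = [
        "using System.Reflection;",
        "",
        f'[assembly: AssemblyVersion("{numeric}")]',
        f'[assembly: AssemblyFileVersion("{numeric}")]',
        f'[assembly: AssemblyInformationalVersion("{version}")]',
    ]
    return "\n".join(lines) + "\n"


def write_assembly_version(version_file: Path, intermediate_dir: Path) -> Path:
    """Read version.txt and write AssemblyVersion.cs into the intermediate output folder."""
    version = version_file.read_text().strip()
    intermediate_dir.mkdir(parents=True, exist_ok=True)
    output = intermediate_dir / "AssemblyVersion.cs"
    output.write_text(assembly_version_source(version))
    return output


print(assembly_version_source("1.0.0-rc1"))
```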

<PropertyGroup>
  <CompileDependsOn>
    UpdateVersion;
    $(CompileDependsOn);
  </CompileDependsOn>
</PropertyGroup>

The created file is also added to the build process.

<Compile Include="$(IntermediateOutputPath)AssemblyVersion.cs" />

Extending the clean process

MSBuild keeps the files that should be removed when cleaning the project in the FileWrites item list, so the generated file is added to it:

<FileWrites Include="$(IntermediateOutputPath)AssemblyVersion.cs"/>

Source code and npm package

GitHub

npm package

07 Apr 2015

Chef Server

At the time of writing, Chef and Microsoft have recently released a ready-to-use Chef Server in the Azure Marketplace. Unfortunately it’s not offered in all countries/regions, so it might not be available with your subscription.

The steps below show how to manually install Chef Server 12 on CentOS 6.x and will work whether you use Azure or not.

Download package

$ wget https://web-dl.packagecloud.io/chef/stable/packages/el/6/chef-server-core-12.0.7-1.el6.x86_64.rpm

Alternatively you can go to https://downloads.chef.io/chef-server/, pick “Red Hat Enterprise Linux” and download the package for “Red Hat Enterprise Linux 6”.

Install package

$ sudo rpm -Uvh chef-server-core-12.0.7-1.el6.x86_64.rpm

Start the services

$ sudo chef-server-ctl reconfigure

Create an administrator

$ sudo chef-server-ctl user-create <username> <first-name> <last-name> <email> <password> --filename <FILE-NAME>.pem

An RSA private key is generated automatically. This is the user’s private key and should be saved to a safe location. The --filename option will save the RSA private key to a specified path.

Create an organization

$ sudo chef-server-ctl org-create <short-name> <full-organization-name> --association_user <username> --filename <FILE_NAME>.pem

The --association_user option will associate the <username> with the admins security group on the Chef server.

An RSA private key is generated automatically. This is the chef-validator key and should be saved to a safe location. The --filename option will save the RSA private key to a specified path.

Check the host name

This should return the FQDN:

If they differ, run the following commands:

$ sudo hostname <HOSTNAME>

$ echo "<HOSTNAME>" | sudo tee /etc/hostname

$ sudo bash -c "echo 'server_name = \"<HOSTNAME>\"
api_fqdn server_name
nginx[\"url\"] = \"https://#{server_name}\"
nginx[\"server_name\"] = server_name
lb[\"fqdn\"] = server_name
bookshelf[\"vip\"] = server_name' > /etc/opscode/chef-server.rb"

$ sudo opscode-manage-ctl reconfigure

$ sudo chef-server-ctl reconfigure

Premium features

Premium features are free for up to 25 nodes.

Management console

On Azure the management console will be available at https://your-host-name.cloudapp.net.

Use Chef management console to manage data bags, attributes, run-lists, roles, environments, and cookbooks from a web user interface.

$ sudo chef-server-ctl install opscode-manage

$ sudo opscode-manage-ctl reconfigure

$ sudo chef-server-ctl reconfigure

Reporting

Use Chef reporting to keep track of what happens during every chef-client run across all of the infrastructure being managed by Chef. Run Chef reporting with the Chef management console to view reports from a web user interface.

$ sudo chef-server-ctl install opscode-reporting

$ sudo opscode-reporting-ctl reconfigure

$ sudo chef-server-ctl reconfigure

Chef Development Kit

This will install the Chef Development Kit on your Windows workstation.

Go to https://downloads.chef.io/chef-dk/windows/#/ and download the installer.

The default installation options will work just fine.

Starter kit

If you’re new to Chef, this is the easiest way to start interacting with the Chef server. Manual methods of setting up the chef-repo may be found here.

We’ll take the easy route, so open the management console and download the starter kit from the “Administration” section. Extract the files to a suitable location on your machine; I’ll assume you’re extracting the contents to c:\.

SSL certificate

This step is only necessary if you’re using a self-signed certificate.

Chef Server automatically generates a self-signed certificate during the installation. To interact with the server we have to put it into the trusted certificates directory, which is done using the following command:

c:\chef-repo>knife ssl fetch

Azure publish settings

If you’re using Azure, download your publish settings here, place the file in the .chef directory and add the following line to knife.rb:

knife[:azure_publish_settings_file] = "#{current_dir}/<FILENAME>.publishsettings"

and also install the Knife Azure plugin:

c:\chef-repo>chef gem install knife-azure

A knife plugin to create, delete, and enumerate Microsoft Azure resources to be managed by Chef.

Check installation

Chef Client

Azure portal

Provision a new VM and add the Chef Client extension. The extension isn’t supported on CentOS at the moment; it only works with Windows or Ubuntu images. This can be very confusing if you’re not aware of it, since the portal still lets you add the extension to a CentOS VM, but the bootstrapping will fail.

Validation key

Use the <ORGANIZATION_NAME>-validator.pem file from your workstation or the .pem-file you created when creating an organization above.

Client.rb

If you’re using a self-signed certificate:

chef_server_url "https://<FQDN>/organizations/<ORGANIZATION_NAME>"

validation_client_name "<ORGANIZATION_NAME>-validator"

ssl_verify_mode :verify_none

If not:

chef_server_url "https://<FQDN>/organizations/<ORGANIZATION_NAME>"

validation_client_name "<ORGANIZATION_NAME>-validator"
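Since the only difference between the two variants is the ssl_verify_mode line, here’s a hypothetical Python helper that renders client.rb either way. It just produces the settings shown above and isn’t part of Chef.

```python
def render_client_rb(fqdn: str, organization: str, self_signed: bool) -> str:
    """Render the client.rb settings from this section (hypothetical helper, not part of Chef)."""
    lines = [
        f'chef_server_url "https://{fqdn}/organizations/{organization}"',
        f'validation_client_name "{organization}-validator"',
    ]
    if self_signed:
        # Turns off certificate verification, so only use it with a self-signed server certificate.
        lines.append("ssl_verify_mode :verify_none")
    return "\n".join(lines) + "\n"


print(render_client_rb("chef.example.com", "myorg", self_signed=True))
```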

Run list (optional)

Add the cookbooks, recipes or role that you want to run.

Instructions on how to install the xplat-cli can be found here.

Linux

Create VM

azure vm create --vm-size <VM-SIZE> --vm-name <VM-NAME> --ssh <SSH-PORT> <IMAGE-NAME> <USERNAME> <PASSWORD>

- Add --connect <FQDN> if you want to connect it to existing VMs.
- CentOS images: azure vm image list | grep CentOS
- Ubuntu LTS images: azure vm image list | grep "Ubuntu.*LTS" | grep -v DAILY

Bootstrap node

knife bootstrap <FQDN> -p <SSH-PORT> -x <USERNAME> -P <PASSWORD> --sudo

- Add --node-ssl-verify-mode none if you’re using a self-signed certificate.
- Add --no-host-key-verify if you don’t want to verify the host key.

Windows

Create VM

azure vm create --vm-size <VM-SIZE> --vm-name <VM-NAME> --rdp <RDP-PORT> <IMAGE-NAME> <USERNAME> <PASSWORD>

- Add --connect <FQDN> if you want to connect it to existing VMs.
- Windows Server 2012 images: azure vm image list | grep "Windows-Server-2012" | grep -v "Essentials\|Remote\|RDSH"

Bootstrap node

Before bootstrapping the node we’ll have to configure WinRM.

Start a PowerShell session on the node and run the following commands:

This configuration should not be used in a production environment.

c:\>winrm qc

c:\>winrm set winrm/config/winrs '@{MaxMemoryPerShellMB="300"}'

c:\>winrm set winrm/config '@{MaxTimeoutms="1800000"}'

c:\>winrm set winrm/config/service '@{AllowUnencrypted="true"}'

c:\>winrm set winrm/config/service/auth '@{Basic="true"}'

The first command performs the following operations:

- Starts the WinRM service, and sets the service startup type to auto-start.

- Configures a listener for the ports that send and receive WS-Management protocol messages using either HTTP or HTTPS on any IP address.

- Defines ICF exceptions for the WinRM service, and opens the ports for HTTP and HTTPS.

If we’re not on the same subnet we also have to change the “Windows Remote Management” firewall rule.

Back on the workstation, create a WinRM endpoint:

c:\chef-repo>azure vm endpoint create -n winrm <VM-NAME> <PUBLIC-PORT> 5985

Finally we can do the bootstrapping:

c:\chef-repo>knife bootstrap windows winrm <FQDN> --winrm-user <USERNAME> --winrm-password <PASSWORD> --winrm-port <WINRM-PORT>

- Add --node-ssl-verify-mode none if you’re using a self-signed certificate.

Knife Azure

When setting up our workstation above we installed the Knife Azure plugin. This allows us to provision a VM in Azure as well as bootstrap it in one step.

Here’s an example using a CentOS 7.0 image, which will be connected to an existing cloud service (DNS name):

c:\chef-repo>knife azure server create --azure-service-location '<service-location>' --azure-connect-to-existing-dns --azure-dns-name '<dns-name>' --azure-vm-name '<vm-name>' --azure-vm-size '<vm-size>' --azure-source-image '5112500ae3b842c8b9c604889f8753c3__OpenLogic-CentOS-70-20150325' --ssh-user <ssh-user> --ssh-password <ssh-password> --bootstrap-protocol 'ssh'

Resources

06 Jan 2015

For reference, here are the versions I used:

Mono: 3.10.0

KVM: Build 10017

KRE: 1.0.0-beta1

libuv: commit 3fd823ac60b04eb9cc90e9a5832d27e13f417f78

I created a new VM in Azure and used the image provided by OpenLogic. It contains an installation of the Basic Server packages.

Install Mono

Add the Mono Project GPG signing key:

$ sudo rpm --import "http://keyserver.ubuntu.com/pks/lookup?op=get&search=0x3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF"

Install yum utilities:

$ sudo yum install yum-utils

Add the Mono package repository:

$ sudo yum-config-manager --add-repo http://download.mono-project.com/repo/centos/

Install the mono-complete package:

$ sudo yum install mono-complete

Mono on Linux by default doesn’t trust any SSL certificates so you’ll get errors when accessing HTTPS resources. To import Mozilla’s list of trusted certificates and fix those errors, you need to run:

$ mozroots --import --sync

Install KVM

$ curl -sSL https://raw.githubusercontent.com/aspnet/Home/master/kvminstall.sh | sh && source ~/.kre/kvm/kvm.sh

Install the K Runtime Environment (KRE)

$ kvm upgrade

Running the samples

Install Git:

$ sudo yum install git

Clone the Home repository:

$ git clone https://github.com/aspnet/Home.git

$ cd Home/samples

Change directory to the folder of the sample you want to run.

ConsoleApp

Restore packages:

$ kpm restore

Run it:

$ k run

That was easy!

HelloMvc

Restore packages:

$ kpm restore

Run it:

$ k kestrel

System.DllNotFoundException: libdl

(Removed big stack trace)

Ouch, so I went hunting for libdl:

$ sudo find / -name 'libdl*'

/usr/lib64/libdl.so.2

/usr/lib64/libdl-2.17.so

Create symbolic link:

$ sudo ln -s /usr/lib64/libdl.so.2 /usr/lib64/libdl

Run it again:

$ k kestrel

System.NullReferenceException: Object reference not set to an instance of an object

at Microsoft.AspNet.Server.Kestrel.Networking.Libuv.loop_size () [0x00000] in <filename unknown>:0

at Microsoft.AspNet.Server.Kestrel.Networking.UvLoopHandle.Init (Microsoft.AspNet.Server.Kestrel.Networking.Libuv uv) [0x00000] in <filename unknown>:0

at Microsoft.AspNet.Server.Kestrel.KestrelThread.ThreadStart (System.Object parameter) [0x00000] in <filename unknown>:0

Progress, but now we need to get libuv working.

Install libuv

$ sudo yum install gcc

$ sudo yum install automake

$ sudo yum install libtool

$ git clone https://github.com/libuv/libuv.git

$ cd libuv

$ sh autogen.sh

$ ./configure

$ make

$ make check

$ sudo make install

Run it again:

$ k kestrel

System.NullReferenceException: Object reference not set to an instance of an object

at Microsoft.AspNet.Server.Kestrel.Networking.Libuv.loop_size () [0x00000] in <filename unknown>:0

at Microsoft.AspNet.Server.Kestrel.Networking.UvLoopHandle.Init (Microsoft.AspNet.Server.Kestrel.Networking.Libuv uv) [0x00000] in <filename unknown>:0

at Microsoft.AspNet.Server.Kestrel.KestrelThread.ThreadStart (System.Object parameter) [0x00000] in <filename unknown>:0

I knew it had to be a path issue or something, so I went hunting for libuv:

$ sudo find / -name libuv.so

/home/trydis/libuv/.libs/libuv.so

/usr/local/lib/libuv.so

I then checked the library name Kestrel was looking for on Linux here, and based on that I created a symbolic link:

$ sudo ln -s /usr/local/lib/libuv.so /usr/lib64/libuv.so.1
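A quick way to check whether the dynamic loader can resolve the exact library name a runtime asks for, before and after creating a link like the one above, is to simply try loading it. A minimal Python sketch using ctypes (my own addition, not something used in this post); it only reports whether loading that name succeeds from the standard search path.

```python
import ctypes


def can_load(library_name: str) -> bool:
    """Return True if the dynamic loader can open library_name, e.g. "libuv.so.1"."""
    try:
        ctypes.CDLL(library_name)
    except OSError:
        # Loading failed: not found on the search path, or not loadable.
        return False
    return True


for name in ("libuv.so.1", "libdl.so.2"):
    print(name, can_load(name))
```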

Run it again:

$ k kestrel

Navigate to http://your-web-server-address:5004/ and pat yourself on the back!

HelloWeb

Restore packages:

$ kpm restore

Run it:

$ k kestrel

Navigate to http://your-web-server-address:5004/.

03 Jan 2015

Here I’ll take a look at how we can create a Roslyn code refactoring using Visual Studio 2015 Preview.

We’ll make a code refactoring that introduces and initializes a field, based on a constructor argument. If you’ve used ReSharper you know what I’m talking about.

Creating the project

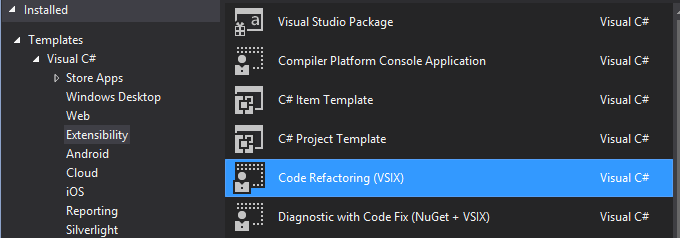

Fire up Visual Studio, go to File -> New Project (CTRL + Shift + N) and pick “Code Refactoring (VSIX)” under “Extensibility”:

The newly created solution consists of two projects:

- The VSIX package project

- The code refactoring project

If we look inside the “CodeRefactoringProvider.cs” file of the code refactoring project, we’ll see that it includes a complete code refactoring as a starting point. In its simplest form implementing a code refactoring consists of three steps:

- Find the node that’s selected by the user

- Check if it’s the type of node we’re interested in

- Offer our code refactoring if it’s the right type

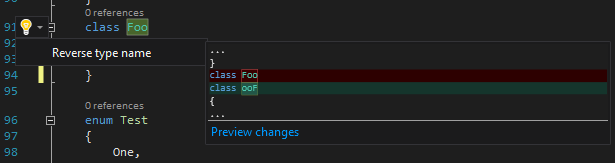

We can test/debug the default implementation by simply pressing F5, as with any other type of project. This will start an experimental instance of Visual Studio and deploy our VSIX content. We can then open a project, place our cursor on a class definition and click the light bulb (shortcut: CTRL + .). As we can see in the picture below the “Reverse type name” code refactoring is presented as well as a preview of the changes.

Implementing our refactoring

It’s worth mentioning the Roslyn Syntax Analyzer, which is a life saver when trying to wrap your head around syntax trees, syntax nodes, syntax tokens, syntax trivia etc.

Here’s the body of our new ComputeRefactoringsAsync method. Let’s go through it:

var root = await context.Document
    .GetSyntaxRootAsync(context.CancellationToken)
    .ConfigureAwait(false);

var node = root.FindNode(context.Span);
var parameter = node as ParameterSyntax;

// Only constructor parameters qualify; CreateFieldAsync expects an enclosing constructor.
if (parameter == null || !(parameter.Parent?.Parent is ConstructorDeclarationSyntax))
{
    return;
}

var parameterName = RoslynHelpers.GetParameterName(parameter);
var underscorePrefix = "_" + parameterName;
var uppercase = parameterName.Substring(0, 1).ToUpper() +
    parameterName.Substring(1);

if (RoslynHelpers.VariableExists(root, parameterName, underscorePrefix, uppercase))
{
    return;
}

var action = CodeAction.Create(
    "Introduce and initialize field '" + underscorePrefix + "'",
    ct => CreateFieldAsync(context, parameter, parameterName, underscorePrefix, ct));

context.RegisterRefactoring(action);

As before we’re getting the node that’s selected by the user, but this time we’re only interested if it’s a node of type ParameterSyntax. I used the Roslyn Syntax Analyzer mentioned earlier to figure out what to look for.

Once we’ve found the correct node type we extract the parameter name and create different variations of it, to search for existing fields with those names. If the parameter name is “bar”, we’ll look for that as well as “_bar” and “Bar”.
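The name-variant check can be sketched in Python like this. field_name_candidates and should_offer_refactoring are hypothetical names that mirror the C# above, with a plain set of existing names standing in for the syntax-tree search done by RoslynHelpers.VariableExists.

```python
from typing import List, Set


def field_name_candidates(parameter_name: str) -> List[str]:
    """Names to look for before offering the refactoring: "bar" -> ["bar", "_bar", "Bar"]."""
    underscore_prefix = "_" + parameter_name
    # Same as parameterName.Substring(0, 1).ToUpper() + parameterName.Substring(1).
    uppercase = parameter_name[:1].upper() + parameter_name[1:]
    return [parameter_name, underscore_prefix, uppercase]


def should_offer_refactoring(parameter_name: str, existing_names: Set[str]) -> bool:
    # Mirrors the early return when RoslynHelpers.VariableExists finds any of the names.
    return not any(name in existing_names for name in field_name_candidates(parameter_name))


print(field_name_candidates("bar"))  # ['bar', '_bar', 'Bar']
```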

If none of the variables exist, we register our refactoring.

Now for the second and somewhat trickier part: the actual refactoring logic. RoslynQuoter is of great help here, since it shows which API calls we need to make to construct the syntax tree for a given program.

Here’s the code, we’ll go through it below:

private async Task<Document> CreateFieldAsync(
    CodeRefactoringContext context, ParameterSyntax parameter,
    string paramName, string fieldName,
    CancellationToken cancellationToken)
{
    var oldConstructor = parameter
        .Ancestors()
        .OfType<ConstructorDeclarationSyntax>()
        .First();

    var newConstructor = oldConstructor
        .WithBody(oldConstructor.Body.AddStatements(
            SyntaxFactory.ExpressionStatement(
                SyntaxFactory.AssignmentExpression(
                    SyntaxKind.SimpleAssignmentExpression,
                    SyntaxFactory.IdentifierName(fieldName),
                    SyntaxFactory.IdentifierName(paramName)))));

    var oldClass = parameter
        .FirstAncestorOrSelf<ClassDeclarationSyntax>();

    var oldClassWithNewCtor =
        oldClass.ReplaceNode(oldConstructor, newConstructor);

    var fieldDeclaration = RoslynHelpers.CreateFieldDeclaration(
        RoslynHelpers.GetParameterType(parameter), fieldName);

    var newClass = oldClassWithNewCtor.WithMembers(
            oldClassWithNewCtor.Members
                .Insert(0, fieldDeclaration))
        .WithAdditionalAnnotations(Formatter.Annotation);

    var oldRoot = await context.Document
        .GetSyntaxRootAsync(cancellationToken)
        .ConfigureAwait(false);

    var newRoot = oldRoot.ReplaceNode(oldClass, newClass);

    return context.Document.WithSyntaxRoot(newRoot);
}

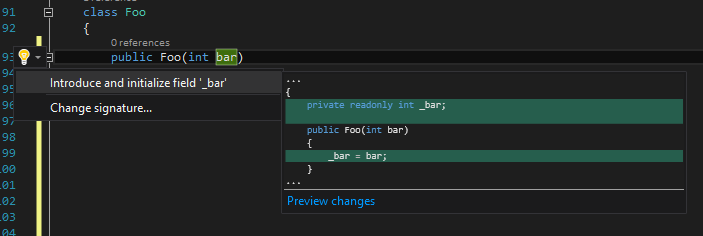

First we find the original class constructor, which contains our parameter.

We use that and add an assignment expression to the body of the modified constructor. So if the original constructor looked like this:

public Foo(int bar)
{
}

the assignment expression results in this:

public Foo(int bar)
{
    _bar = bar;
}

We take the old class declaration and create a new one with the constructor replaced.

Then we create the field declaration:

private readonly int _bar;

Finally we insert the field declaration as the first member in our new class declaration, format our code and return the new document.

The final result

The result will not put ReSharper out of business any time soon, but I’m happy that it was quite easy to get started :-)

The “RoslynHelpers” class I created could be replaced with extension methods, but checking out Roslyn was my main focus here.

The code is available here.